Are we close to the Metaverse? [Storytime Saturdays]

Evaluating the Research published by Meta to figure out how much progress Meta has made towards their goals.

I’m sure y’all have been waiting for this one.

I got a really good reception on the Metaverse article, so I’m here to follow up with an amazing part 2. In this article, I will look into the results published by Meta, which will help us discern how close Meta is to the Metaverse in at least a base form. This follows up on yesterday’s post, where I cover why the Metaverse is a great business decision. To get the most out of this post, make sure you’ve read that one first.

Let’s get funky.

Key Highlights

The aspects needed to make the Metaverse work- There are a few different parts needed to create the MVP of the Metaverse. We’d need the actual engineering for the VR/AR stuff to be at least visually immersive. The platform would have to be easy for everyone to use. We’d need real-time translation of languages spoken throughout the world. We want systems that detect harmful content to ensure that the platforms are not misused. Our glasses must be robust enough to detect items in a variety of contexts (a huge problem in ML). And our solutions need to be adaptable and able to quickly learn new patterns.

What this requires- This requires a lot of Data Analysis and Machine Learning to make it work. As you can see, there are a lot of object classification/detection tasks. There is a lot of physical engineering required as well, but many of those problems have been largely solved.

What we will cover- We will look at the publications Meta has put out across these domains. While I will be referencing technical research, no real technical background is required. For those of you interested in my technical research, make sure you check out my Medium, LinkedIn, and YouTube profiles. Links at the end.

My conclusion- Based on the paper results, I’m pretty optimistic about the future. I’ll let you draw your own conclusions after reading through the analysis. I’d love to hear your thoughts.

The Aspects of the Metaverse

In his talk, Introducing Meta, Mark Zuckerberg talked about how technology was meant to allow people “To connect with anyone, to teleport anywhere”. The Metaverse is supposed to be a series of universes, where one would switch between office worker, gamer, and real-estate tycoon based on their requirements. Much of the Metaverse will probably play out similarly to an open-world MMORPG to allow people to interact with each other. Even the basic version will require getting the following components working-

The Hardware- Without the correct hardware, there is no way for people to interact with the Metaverse. We’ve already seen reasonably functional smart glasses. The headsets sold by Oculus have mostly positive reviews. We even have VR/AR websites being coded on the internet. I played around with the tools a bit, and the progress is scary. A non web-dev like me could create a basic VR website (you can see it here). Meta has put a lot of money into making React the language of choice for VR across all kinds of platforms.

Computer Vision- A lot of the Metaverse will be visual. Whether it’s object classification/detection on Smart Glasses, ensuring no harmful images/assets are uploaded onto the platforms, or ensuring that the Metaverse is able to work with images/uploads of differing qualities, Computer Vision will be a large part of the experience.

Language Processing- Zuck wants me to be able to smoothly communicate with someone in Saudi Arabia, Zimbabwe, and Korea. Remember, the Metaverse becomes more valuable with more users. To make the most of the Metaverse, we will need real-time language translation.

Information Summary, Searching, and Verification- We will need the ability to search through large corpora of Metaverses to find one that matches our needs. With that much information floating around, we would also need a lot of tools to make verification and fact-checking easier, along with the ability to summarize all the information stored on this platform.

Cost-Effectiveness- The Metaverse will operate on a scale that makes the Internet look like a Baby. Remember, the Metaverse will have a lot of 3D videos, which require insane amounts of storage and processing power. It will also need to be a system that adapts to new data quickly without being too expensive.

Easy Content Generation/Personalization- The internet is powerful because there are many many tools that allow people to generate content easily. The Metaverse needs to allow people to do the same.

For the hardware (the first item above), there are a lot of people reviewing the various products. There isn’t much more value I can add there. Let’s cover Meta’s progress in the other avenues.

Computer Vision

Computer Vision refers to the field of study that focuses on making Machines see. This involves detecting objects, classifying images, and being able to sort through trillions of uncurated, messy, potentially low-quality images. As a primarily visual platform, the Metaverse needs CV extensively.

Enter their work on SEER 10B (Better, fairer computer vision through self-supervised learning on diverse datasets). The model has 10 billion dense parameters and achieves insane results in Computer Vision tasks across the board. There is a large emphasis on training on globally diverse datasets, as seen in the graphic below-

Meta’s emphasis on Data Diversity can be summed up by this quote-

In particular, advancing computer vision is an important part of building the Metaverse. For example, to build AR glasses that can guide you to your misplaced keys or show you how to make a favorite recipe, we will need machines that understand the visual world as people do. They will need to work well in kitchens not just in Kansas and Kyoto but also in Kuala Lumpur, Kinshasa, and myriad other places around the world. This means recognizing all the different variations of everyday objects like house keys or stoves or spices. SEER breaks new ground in achieving this robust performance.

Meta has also made other strides in the domain of CV. Add to this their work in Deepfake Detection (Creating a dataset and a challenge for deepfakes) and their efforts to push new learning paradigms (Yann LeCun on a vision to make AI systems learn and reason like animals and humans) to help their AI agents catch any malicious behavior, and you see that a lot of the baseline work for Vision tasks has been done. We’ll cover some of their other work in the other sections.

Language Processing

To maximize the Metaverse experience, we will need a lot of work in NLP. You’d need real-time translation of the thousands of variants of languages spoken throughout the world. Meta’s No Language Left Behind Initiative gained a lot of attention in this regard. You can also look at their posts Teaching AI to translate 100s of spoken and written languages in real-time and Pseudo labeling: Speech recognition using multilingual unlabeled data, both focused on achieving this goal.

Meta AI is announcing a long-term effort to build language and MT tools that will include most of the world’s languages. This includes two new projects. The first is No Language Left Behind, where we are building a new advanced AI model that can learn from languages with fewer examples to train from, and we will use it to enable expert-quality translations in hundreds of languages, ranging from Asturian to Luganda to Urdu. The second is Universal Speech Translator, where we are designing novel approaches to translating from speech in one language to another in real time so we can support languages without a standard writing system as well as those that are both written and spoken.

Meta recently proved their chops with a real-time speech translation system for Hokkien, a primarily spoken language. This bodes well for the future.

What about Language Safety? The Metaverse would not be a great platform if it was filled with hate speech and misinformation. Enter their extremely powerful few-shot learner, which can quickly detect new kinds of harmful content with only a few training samples (Harmful content can evolve quickly. Our new AI system adapts to tackle it.).

And of course, we have the Large Language Models, which we will cover in the upcoming sections.

There is another element of Language Processing required for the Metaverse. Since the Metaverse is looking to become the internet of AR/VR, it will require amazing search functionality. If you have a lot of time, refer to these 3 primary sources: Meta’s announcement post (Introducing Sphere: Meta AI’s web-scale corpus for better knowledge-intensive NLP), their Github, and their paper (The Web Is Your Oyster - Knowledge-Intensive NLP against a Very Large Web Corpus).

If you don’t have the time/expertise to read through all of those and just want an explanation of how this will work, check out my breakdown of this technology in my article- Meta AI declares war on Google.

All of these are very cool, and prove the feasibility. However, it means nothing if these systems are too expensive. This is where the next bit comes in.

Meta’s Research into Efficient Learning

Improving your Machine Learning training pipelines is a great way to improve your performance a lot without using extremely expensive models. In their paper, “A ConvNet for the 2020s”, they modernize the pipelines for CV to match the state-of-the-art transformers. This allows them to retain the simplicity of ConvNets while gaining peak performance. You can read my summary of the paper here.

Recently, Meta AI expanded on this by creating an efficient way to pick samples for model training. As with all things, training AI models on data has diminishing returns. Eventually, new samples don’t have much additional information to add. Most big-data approaches throw heaps of data at the model to hit new levels. Meta took a different approach, coming up with an automated way to intelligently pick the best samples.

Such vastly superior scaling would mean that we could go from 3% to 2% error by only adding a few carefully chosen training examples, rather than collecting 10x more random ones

- Read more about how they did this here. Yes I was being cheeky with the title for that article.
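To make the idea of picking samples a bit more concrete, here is a minimal Python sketch. This is not Meta’s implementation. It assumes every training example already has an embedding, scores "difficulty" as distance from a single centroid (a simplification I chose for brevity), and keeps only the hardest fraction. The names `prune_by_difficulty` and `keep_fraction` are hypothetical.

```python
import math


def centroid_of(points):
    """Component-wise mean of a list of equal-length vectors."""
    dim = len(points[0])
    return [sum(p[i] for p in points) / len(points) for i in range(dim)]


def distance(a, b):
    """Euclidean distance between two vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def prune_by_difficulty(embeddings, keep_fraction):
    """Return indices of the examples to keep, hardest first.

    Assumption: examples far from the centroid are treated as "hard"
    (more informative), examples close to it as redundant.
    """
    center = centroid_of(embeddings)
    ranked = sorted(
        range(len(embeddings)),
        key=lambda i: distance(embeddings[i], center),
        reverse=True,
    )
    n_keep = max(1, round(len(embeddings) * keep_fraction))
    return ranked[:n_keep]


# Toy data: three near-duplicates and one outlier.
toy = [[0.0, 0.0], [0.1, 0.0], [0.0, 0.1], [5.0, 5.0]]
print(prune_by_difficulty(toy, keep_fraction=0.5))  # [3, 0]
```

On the toy data, the outlier and one representative of the near-duplicate cluster survive, which is the intuition behind the quote: a few carefully chosen examples can stand in for many redundant ones.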

All of this lays out a solid foundation. These are the barebones required to make this a viable platform. However, to truly make the Metaverse a powerhouse, it needs one final element. The internet only really became revolutionary when it allowed everyone to start interacting with it and sharing their stuff. The Metaverse becomes a success with the masses if non-technical people can create and share their content/expertise in their preferred way. Has Meta made any progress in that avenue?

Content Generation and Personalization

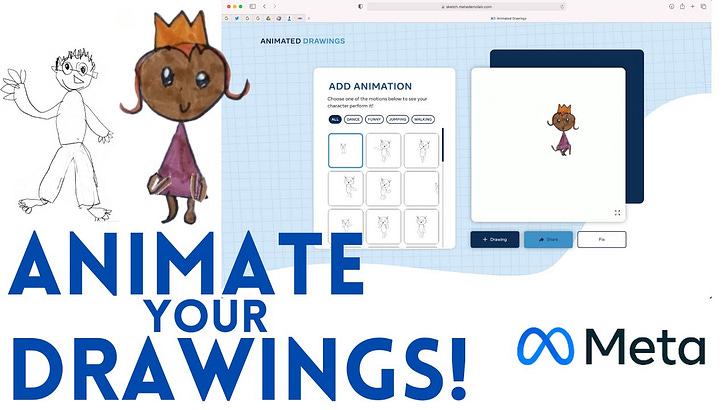

Here are a few milestones that seem interesting- Meta’s Children’s Drawing Animator (Using AI to Animate Children’s Drawings) allows people to upload a single sketch and see it get animated. Take a look below.

Want something for a Zoom meeting/your content? Creating better virtual backdrops for video calling, remote presence, and AR has you covered. And Meta’s Make-A-Video caught the attention of the mainstream media.

These examples might seem silly at times, but these point to something amazing. Meta has made amazing progress in the various avenues required to make the Metaverse work. Given the resources they have available, and how much money is being poured into Tech in general, it is likely that they will have good results.

Obviously, these technologies are not perfect. I have a lot of content that goes over their weaknesses (such as this article where I show that Neural Networks are bad for tabular data). But I am optimistic about the direction of this solution. As I’ve already covered in my article yesterday, the Metaverse is a good business decision. It makes sense. And Meta has the resources to make it work. Given all their progress, prospects look strong for it. I’ll let you take a look at all the resources and make up your mind.

Loved the post? Hate it? Want to talk to me about your crypto portfolio? Get my predictions on the UCL or MMA PPVs? Want an in-depth analysis of the best chocolate milk brands? Reach out to me by replying to this email, in the comments, or using the links below.

Stay Woke. I’ll see you living the dream.

Go kill all,

Devansh <3

Reach out to me on:

Instagram: https://www.instagram.com/iseethings404/

Message me on Twitter: https://twitter.com/Machine01776819

My LinkedIn: https://www.linkedin.com/in/devansh-devansh-516004168/

My content:

Read my articles: https://rb.gy/zn1aiu

My YouTube: https://rb.gy/88iwdd

