Is Big Tech using Data Laundering to cheat artists? [Storytime Saturdays]

How Stable Diffusion, MidJourney, DALL-E, Meta, and other generators hide behind hype, non-profits, and creative accounting to avoid paying artists

As I was working through research for my upcoming article on AI art, I came across something very concerning: Data Laundering. Big Tech firms like Meta, Google, OpenAI, and Stability are using it to get around copyright laws and build Deep Learning based Text-Image generators. By doing so, they are cheating artists out of their rightful compensation. I was skeptical of these claims at first, but after looking into them, I concluded that this is a huge issue. Big enough that I decided to postpone my original AI art article and write this one first. I will cover this in-depth because, as Leaders of the future, you must be familiar with this discussion. The Tech industry is moving towards large-scale data scraping, and there are a lot of perspectives on that trend that you must consider.

In this article, I will cover Data Laundering, how Big Names in Machine Learning are getting around copyright, and their double standards around different arts. This is a very nuanced issue that touches on intellectual property and copyright. Through this article, I hope to raise awareness so that you have a better understanding of what is at stake. Towards the end, I will leave you with a question: should visual artists be compensated more for their contributions to these diffusion-based models?

Key Highlights

What is Data Laundering- Data Laundering is the conversion of stolen data so that it may be sold or used by ostensibly legitimate databases.

How Big Tech Data Launders artist data- They create or fund non-profits that build these datasets and models for “research purposes”. This lets them get around copyright. The resulting models are then shared with for-profit companies, which monetize them as APIs sold to other people and groups.

The Big Tech Double Standard- When I first heard about this, I thought of fair use. Technically these models create something new, so they should be protected by fair use. However, I learned that Stability is building diffusion-based models for music as well. Unlike Stable Diffusion, the music model uses no copyrighted data. It can’t be a coincidence that the models avoid copyrighted material from an industry that has much better lawyers.

The counter-argument- People on the other side have argued that music is a lot easier to replicate than art (I’m skeptical of this). By that logic, training models on copyrighted songs would be more likely to produce pieces that are too similar to the originals. That is why Stability avoids copyrighted music but not copyrighted art (again, I’m skeptical).

The Latent Space Debate- Technically, these models learn the latent space of the images, not the images themselves. So how much of this is really stealing vs ‘inspiration’?

Want to learn more about this? Read on. This is definitely the most important article I’ve written because it involves people’s livelihoods and the future of AI. Whether you’re an artist whose work might fuel these models, a developer who might (often unknowingly) use people’s work without permission, or a business bro trying to understand this industry to build the next thing, this is a discussion you should know about. Hopefully, this article can add to the conversation around this issue.

What is Data Laundering

Data Laundering involves transforming stolen Data so that it can be used for legitimate purposes (like selling or use in a legit dataset). This can involve many steps and is going to become a bigger problem as the use of Data in society increases.

As with other forms of data theft, data harvested from hacked databases is sold on darknet sites. However, instead of selling to identity thieves and fraudsters, data is sold into legitimate competitive intelligence and market research channels.

-Source- ZDNet, Cyber-criminals boost sales through ‘data laundering’

In the case of Big Tech companies and AI art, the process plays out like this:

Create or fund a non-profit entity to build the datasets for you. The non-profit, research-oriented nature of these entities allows them to use copyrighted material more easily.

The resulting dataset can now be used by Big Tech for commercial purposes.

Think I’m making things up? Think back to Stable Diffusion. Who created it? Many people think it was Stability AI. If you’re one of them, you’re wrong. It was created by researchers at the Ludwig Maximilian University of Munich, with a compute donation from Stability. Look at the GitHub page of Stable Diffusion to see for yourself:

Stable Diffusion is a latent text-to-image diffusion model. Thanks to a generous compute donation from Stability AI and support from LAION, we were able to train a Latent Diffusion Model on 512x512 images from a subset of the LAION-5B database.

So the non-profit creates the Dataset/Model, and the company decides to monetize it. A pretty clean way to handle copyright.

To a certain degree, this is normal. Lots of companies fund research in universities and then use those insights for better commercialization. It’s cheaper than having an in-house R&D wing. The debate isn’t around what is going on. It’s about where you should draw the line. Keep that in mind as you read. Unlike a lot of other commentators, I’m not someone who will screech about this being terrible and a new kind of evil. I think that’s a mix of misunderstanding and sensationalism. This is in many ways a logical extension of what is being done. However, I think it’s important to have a conversation about this subject. How far is too far? When should we step in and involve the interests of people who have contributed indirectly? It’s important to at least think about these issues. If you don’t engage in the conversation, someone else will decide the rules for you.

With that out of the way, let’s move on to something pretty damning. Let’s talk about the double standard between how Tech companies treat music and art.

The ML Double Standard

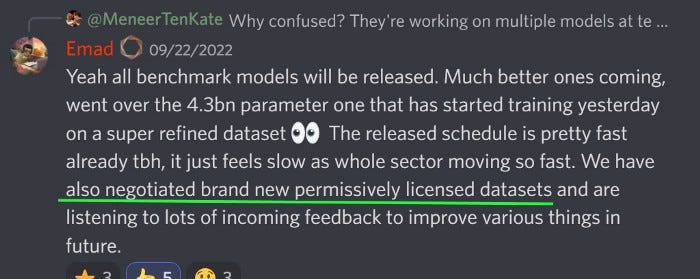

Stability AI is also creating another diffusion model: Dance Diffusion. It is Stable Diffusion, but for music. What’s interesting about its dataset is that it contains no copyrighted material. This double standard is what made me write this article in the first place. Instead of me writing about it, you can read their words:

Two things strike me as interesting. The first is their naked acknowledgment that the difference in datasets comes from a difference in copyright standards. To me, the argument that music is easier to memorize doesn’t entirely make sense because, with a diverse enough dataset, you should be able to learn a diverse latent space. You should also be able to bias the model towards the non-copyrighted songs if memorization really becomes a problem. What allows people to use copyrighted art freely, but makes music impossible to touch? The second is the wording itself, which is very interesting even if we assume their statement to be completely true and unfixable. Read the following sentence:

The unavoidable controversy of AI image generators replacing traditional digital artists is not lost on Harmonai; this is why its focus is on making the art of music production more accessible to all with its powerful generative AI-driven tools, rather than overtaking the whole music production process.

And compare it with the following statement:

Stable Diffusion is a text-to-image model that will empower billions of people to create stunning art within seconds. It is a breakthrough in speed and quality meaning that it can run on consumer GPUs.

-From the Stable Diffusion release

SD has been marketed as a way to replace artists, a tool that lets everyone create the art they desire. Meanwhile, DD will only assist with production. DD’s write-up explicitly mentions the worry about humans being replaced, while SD’s doesn’t even mention artists (great catch by Stephen there).

I find it interesting that there is such a distinct difference between the two fields. If music can be memorized more easily, that also means that the whole production should be easier to replace. Why not create a tool to do that? Why is visual art being replaced but music not? The only difference that stands out to me is the difference between the lobbies of the two fields. Music has had a much stronger legal presence.

So far, I’ve painted a mostly negative picture (see what I did there). However, there are justifications given for this double standard, and for why Visual Artists aren’t being paid for these generators. Let’s cover them next.

When it comes to music, things are even more aggressive in the realm of copyrights. Keep copyrighted material out of the AI model if you ever want a realistic change for it to be used for anything worth a damn. Even Weird Al licenses all of the songs and material he parodies — even if he could argue transformative work — he just can’t be bothered to go to court for it. — This discussion, on Stability’s subreddit, is great

The Counter Arguments

There are two major points that Big Tech has used to justify the status quo. The first pertains to why there is such a different standard for music and visual art. Two major justifications are given for this:

Music is much easier to replicate- The worry is that music has a smaller range of outputs, so a model will be more likely to churn out music that closely resembles existing creations. OpenAI’s Jukebox is already remarkably good at mimicking the feel of an artist. However, I am slightly skeptical of this. Jukebox has very descriptive prompts (rap in the style of Tupac). Using diverse inputs and different prompts, and encouraging exploration in the model, should result in more compliant outputs.

The difference in Copyright Standards- Different industries have different tolerances. Stability as a company will try to get away with as much as it can. And on this point, I completely agree. I think the important discussion is not whether this move is legal, but whether this is ethical. Artists pour a lot of effort into their work. It’s not a stretch to say that they should be compensated for their effort.

How this should be dealt with is something I will leave up to you. Stability raised 101 Million Dollars in funding. With the billions being poured into training obscenely large models, there is some budget to pay the artists what they are owed. Do you agree? Let me know in the comments.

Moving on to the next argument, this is the more overarching conversation to be had. Technically, these models embed representations of their training images into a latent space and use that space to generate outputs. The models don’t copy the images so much as take inspiration from them. If I decided to create a Goku-like character after looking at DBZ, do I owe money to Akira Toriyama? Do all the anime creators pay royalties to their inspirations? No, to both. Should this be any different for large models, which are essentially just sampling a data pool of inspirations to create their outputs?
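To make the latent space idea a bit more concrete, here is a deliberately tiny sketch in Python. It is not how Stable Diffusion works internally. It assumes a made-up world where every "image" is just 4 pixels following one shared pattern (the `BASIS` list, which I invented for illustration), so the whole latent space is a single number. The names `encode`, `decode`, and `generate` are hypothetical helpers, not part of any real library.

```python
import random

# Hypothetical shared pattern: every toy "image" is this ramp, scaled up or down.
BASIS = [0.1, 0.3, 0.6, 1.0]

def encode(image):
    """Project a 4-pixel image onto the shared pattern, giving one latent number."""
    num = sum(p * b for p, b in zip(image, BASIS))
    den = sum(b * b for b in BASIS)
    return num / den

def decode(z):
    """Rebuild a 4-pixel image from its latent number."""
    return [z * b for b in BASIS]

# Toy "training set": three images built from three latent values.
training_images = [decode(z) for z in (0.2, 0.5, 0.9)]

# What the "model" keeps is the latent values, not the images themselves.
latents = [encode(img) for img in training_images]

def generate(rng):
    """Sample a latent value inside the range seen in training and decode it."""
    z = rng.uniform(min(latents), max(latents))
    return decode(z)

new_image = generate(random.Random(0))
print(new_image)
print(new_image in training_images)
```

The generated image is built from a point in latent space that sits between the training examples, so it is shaped entirely by the training data without being a copy of any one image. Whether that counts as 'inspiration' or as stealing is exactly the debate.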

This question becomes murkier given that Art has historically rewarded people that ‘steal’ from others. To quote this BBC article on the subject,

Pablo Picasso (“good artists copy; great artists steal”) could never have painted his breakthrough works of the 1900s without recourse to African sculpture.

To me, this boils down to a fundamental question: what do you value more, helping people or having a consistent philosophical principle? Any law or approach that protects intellectual property draws the line somewhere. Where should this line be drawn? This is a question I don’t have an answer to. I’d love to hear your thoughts in the comments.

Hopefully, this will give you more perspective on this issue. Such conversations are important in helping us create more responsible solutions for the future. With how interconnected the world is, there is a high chance of unintended consequences when one solution is applied in a context it wasn’t trained to handle. Having such conversations with a diverse crowd is key to ensuring that the solutions work to help all parties, instead of pulling one down to help another.

Loved the post? Hate it? Want to talk to me about your crypto portfolio? Get my predictions on the UCL or MMA PPVs? Want an in-depth analysis of the best chocolate milk brands? Reach out to me by replying to this email, in the comments, or using the links below.

Stay Woke. I’ll see you living the dream.

Go kill all,

Devansh <3

Reach out to me on:

Instagram: https://www.instagram.com/iseethings404/

Message me on Twitter: https://twitter.com/Machine01776819

My LinkedIn: https://www.linkedin.com/in/devansh-devansh-516004168/

My content:

Read my articles: https://rb.gy/zn1aiu

My YouTube: https://rb.gy/88iwdd
