Algorithmic Arms Race: How Tech Is Fueling Weapons Systems and Mass Surveillance [Thoughts]

The Increasing Merger of Silicon Valley and the Pentagon, and the Lines We Must Draw Now

It takes time to create work that’s clear, independent, and genuinely useful. If you’ve found value in this newsletter, consider becoming a paid subscriber. It helps me dive deeper into research, reach more people, stay free from ads/hidden agendas, and support my crippling chocolate milk addiction. We run on a “pay what you can” model, so if you believe in the mission, there’s likely a plan that fits (over here).

Every subscription helps me stay independent, avoid clickbait, and focus on depth over noise, and I deeply appreciate everyone who chooses to support our cult.

PS – Supporting this work doesn’t have to come out of your pocket. If you read this as part of your professional development, you can use this email template to request reimbursement for your subscription.

Every month, the Chocolate Milk Cult reaches over a million Builders, Investors, Policy Makers, Leaders, and more. If you’d like to meet other members of our community, please fill out this contact form here (I will never sell your data nor will I make intros w/o your explicit permission)- https://forms.gle/Pi1pGLuS1FmzXoLr6

To reuse the disclaimer from our Amazon article- This one’s all me. Not my coworkers, clients, the chocolate milk cult, my fight club, and/or my halal guy. I do this completely alone, and if there’s fallout, it lands here, and nowhere else. My words, my responsibility.

This Friday, three tech titans (an ex-OpenAI engineer, a Meta VP, and a Palantir product chief) raised their right hands and swore into a brand-new Army Reserve “innovation detachment.” Earlier this year, the Pentagon inked a $642 million counter-drone contract with Anduril and cleared the first $1 billion for autonomous drones.

These aren’t edge cases. As we covered in our AI Market Report for May, the renaming of the “AI Safety Institute” to the “Center for AI Standards and Innovation” was a clear indication that the government was taking a much more aggressive stance on investing in AI for war.

Most concerning to me, however, is not the government’s inclinations (governments do as they do), but how openly Silicon Valley is embracing this. Gone are the days of anti-establishment “hackers” (romanticized and exaggerated as that image was) who railed against Big Brother (see the ad that put Apple on the map). The new generation of Builders and Investors seems to be proactively building and funding a tech-based Onii-chan (aka Big Brother).

All this creates a supply chain that compresses the gap between sensor and trigger to less than a heartbeat. Once that architecture is locked, every civilian use of AI inherits its assumptions, its surveillance scaffolding, and its hair-trigger incentives.

Before the concrete sets, we need to interrogate the logic driving this merger — steel-man the case for it, then stress-test the foundations. While I will present the arguments for the pros in a good-faith manner, I make no pretensions of objectivity. In my opinion, people proactively making this technology happen are some combination of-

In the Government.

Greedy.

Ignorant of history.

Short-sighted.

A bitch that doesn’t have the spine to think about (and work on) a better future.

In case you can’t tell, I absolutely hate this shift, and this piece is openly ideological: it’s meant to get you to join in my player-hating.

Let’s get it.

Executive Highlights (TL;DR of the Article)

1. AI militarization isn’t neutral tech progress; it’s a fundamental rewrite of societal infrastructure. Whoever controls the code and sets the rules shapes every future built on that infrastructure.

2. Arguments for AI-driven warfare — deterrence, precision, inevitability — fail upon scrutiny. They dangerously underestimate AI fragility, accelerate algorithmic escalation, and create opaque, unaccountable power structures.

3. Systemic issues run deeper than any one bad policy:

Vendor Capture: Narrow interests stifle innovation and lock militaries into dangerous, proprietary technologies.

Erosion of Democracy: Secrecy, complexity, and rapid automation cripple civilian oversight.

Exported Authoritarianism: Surveillance tools become Trojan horses for oppression, compromising sovereignty and autonomy.

Irreversible Risks: Autonomous weapons pose existential threats impossible to roll back once normalized.

4. Practical solutions exist — imperfect, difficult, yet urgently necessary:

International bans and strict human oversight on lethal AI.

Redirect funding and talent from militarized AI towards civilian innovation.

Mandate radical transparency and empowered civilian oversight for surveillance systems.

Use investment ethics to financially disincentivize harmful AI development.

Educate the public and policymakers on AI capabilities and risks.

5. None of this is inevitable:

Every moment of inaction, every concession to cynicism, hands the pen to interests indifferent to human dignity.

We can — and must — pick up the pen and write a future worth living in, no matter how messy or incomplete each step may feel.

Because even imperfect actions taken today create space for better choices tomorrow.

An additional note to investors and Silicon Valley- In economics, a defensive expenditure occurs when we spend money on something that does not increase our welfare but is necessary to avoid a decrease in well-being. For example, spending money on health insurance does not necessarily make us better off, but it does help protect us from future costs that would negatively affect our well-being.

Militaries fall squarely into this category: they don’t create value by existing; their value is in preventing something worse. So investing in military technology ultimately means investing in a defensive good (unless, of course, you’re waiting for them to plunder someone else). Ethics of war aside, this means you’re investing in a constrained market, which runs very much against your ethos.

I put a lot of work into writing this newsletter. To do so, I rely on you for support. If a few more people choose to become paid subscribers, the Chocolate Milk Cult can continue to provide high-quality and accessible education and opportunities to anyone who needs it. If you think this mission is worth contributing to, please consider a premium subscription. You can do so for less than the cost of a Netflix subscription (pay what you want here).

I provide various consulting and advisory services. If you’d like to explore how we can work together, reach out to me through any of my socials over here or reply to this email.

Part 2: Can AI for War and Surveillance Be Ethical?

Before we critique the switch, I think it’s always worthwhile to explore the reasons people advocate for these technologies (beyond the fact that they are profitable investments). This is always helpful for avoiding misunderstandings, especially in very contentious issues like this.

Below are the arguments that are often given in favor of deploying AI for security purposes-

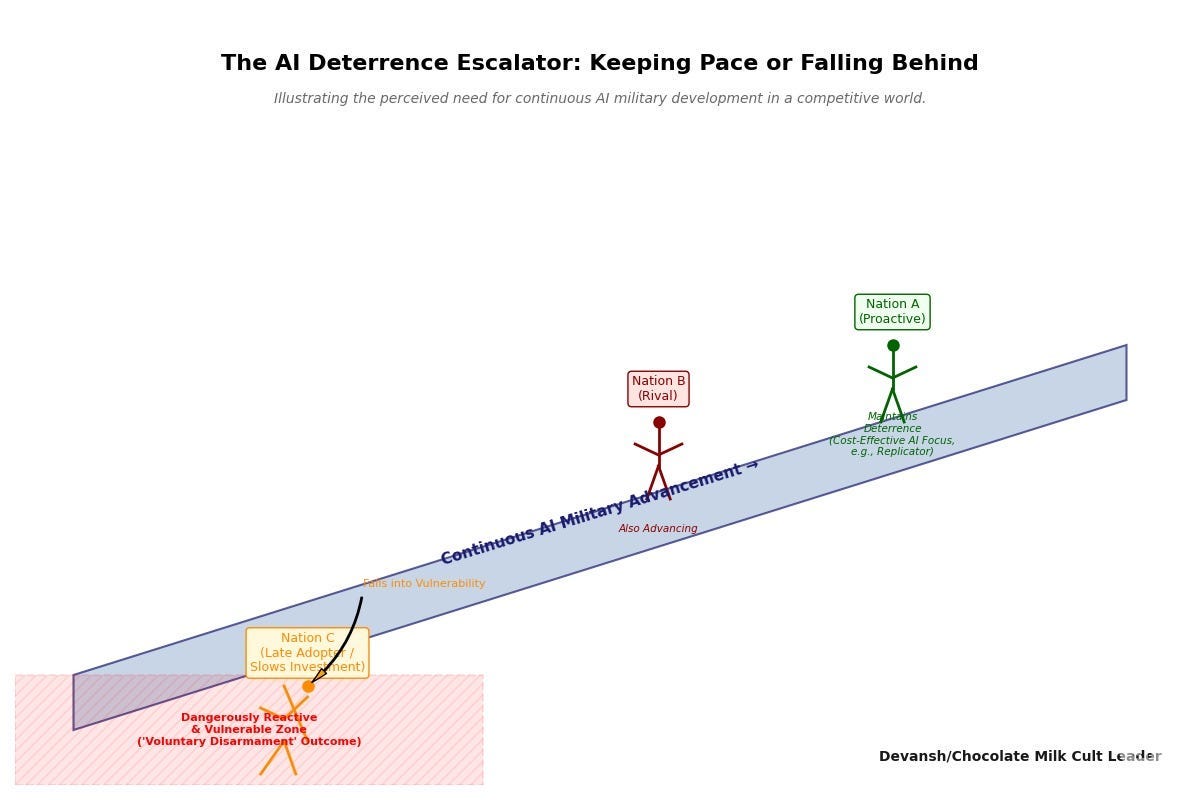

Deterrence in a Multipolar Knife-Fight

Great-power rivalry isn’t a speculative threat — it’s the reality again. Geopolitical competition between the US, China, Russia, and other nations has intensified, and AI-driven weapon systems are the new coin of the realm.

The Pentagon’s Replicator program, which aims to deploy thousands of autonomous drones to counterbalance adversaries at a fraction of the cost of traditional hardware, embodies this logic. The reasoning is simple — falling behind on AI-driven autonomy is voluntary disarmament. If a rival state fields an autonomous kill-chain first, it dictates the rules and tempo of conflict, leaving late adopters dangerously reactive and vulnerable.

Fewer Body Bags, Faster Wins:

On the battlefield, speed and accuracy win engagements. Autonomous weapon systems don’t feel fear, fatigue, or adrenaline. They don’t hesitate, tremble, or flinch. They analyze terabytes of surveillance data in milliseconds, launching precision-guided munitions faster and more accurately than human operators.

Israel’s 2021 Gaza conflict was publicly termed “the first AI war,” precisely because machine-generated intelligence cut strike-to-target response times dramatically. At that time, “Israeli military leaders described AI as a significant force multiplier, allowing the IDF to use autonomous robotic drone swarms to gather surveillance data, identify targets, and streamline wartime logistics.” This has continued-

“At 5 a.m., [the air force] would come and bomb all the houses that we had marked,” B. said, an anonymous IDF soldier. “We took out thousands of people. We didn’t go through them one by one — we put everything into automated systems, and as soon as one of [the marked individuals] was at home, he immediately became a target. We bombed him and his house.” This is the reality of the war in Gaza. Israel employs sophisticated artificial intelligence (AI) tools to enhance its Intelligence, Surveillance and Reconnaissance (ISR), which then allows it to strike targets all over Gaza. However, this augmentation of military capabilities raises profound ethical concerns and may carry geopolitical implications. AI-assisted airstrikes, which, in some cases rely almost solely on the assessment of an algorithm, may lead to a disregard for basic rules of war, such as discrimination and proportionality.”

Proponents argue that the power of these systems ultimately leads to fewer friendly casualties, fewer collateral deaths, and quicker resolutions. In their view, this makes such systems not only strategically wise but ethically necessary.

Dual-Use Is Destiny, So Better Our Oversight Than Theirs (aka Dario Amodei saying that AI is so dangerous that only we should build it):

AI, by its nature, is general-purpose. Trying to separate its civilian and military applications is unrealistic. Technologies like computer vision or predictive analytics used in healthcare and logistics are easily adapted to surveillance and military operations. The same facial recognition that companies build for weapons targeting can be adapted into better bots for disaster rescue.

If democratic nations abstain from militarizing these dual-use technologies, authoritarian regimes surely won’t hesitate. The European Union’s AI Act explicitly carves out national security exceptions, implicitly acknowledging that it’s better for democracies to lead AI’s development transparently rather than cede that space entirely to adversaries who might weaponize it without oversight.

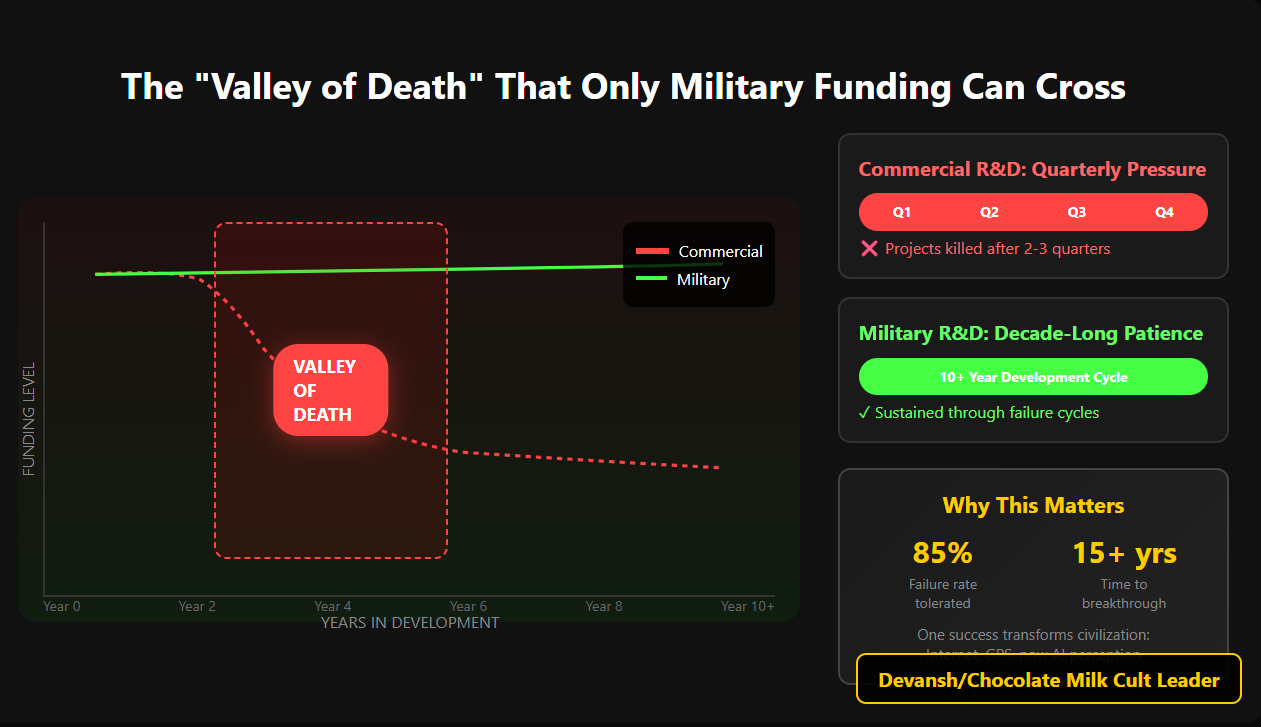

Economic Flywheel and Technological Spillover:

Military funding has historically underwritten technology that later reshaped civilian life: radar gave us microwave ovens, DARPA funded the ARPANET that grew into the Internet, and the Department of Defense built GPS. Advocates see military AI spending as another iteration of this pattern.

Anduril’s perception stack can easily be used for handling port security and wildfire response. In such cases, the military budgets absorb R&D risks that commercial investors won’t, ultimately accelerating innovation cycles and producing civilian benefits downstream.

Counter-Terror, Border Control, Cyber Defense:

AI-driven surveillance tools significantly improve homeland security, supporters claim, replacing brute-force policing with precision interventions. Systems that utilize wide-area facial recognition, behavioral analysis, and pattern detection enable security agencies to proactively intercept threats.

“Nothing to Hide”

This is very common when talking about surveillance systems. If I, Joe Schmoe 69, have done nothing wrong, why should I care if the government is looking through my texts or seeing what I had for lunch? Only the seedy characters who have things to hide would be concerned about AI being used in surveillance. For the rest of us, this will only improve accountability and safety (since the systems can be used to detect and track criminals very quickly).

Moral Imperative to Protect Troops & Civilians:

Lastly — and perhaps most compellingly from a humanitarian perspective — is the argument that using autonomy in warfare is ethically obligatory. If an autonomous drone swarm can end a fight in minutes or hours instead of the days/months/years associated with traditional armed conflict — we must use it.

In the future, if both sides eventually fight with drones and robots, won’t bloody conflicts be a thing of the past? Wouldn’t wars turn into advanced, high-stakes versions of Age of Empires games?

Combine this with the previous arguments, and the reasoning follows a clear ethical imperative: if technology exists to reduce harm to your soldiers and civilians alike, not working towards it becomes a moral failure rather than a virtuous choice. After all, we haven’t yet figured out how to live in harmony, so doesn’t it make sense to at least mitigate the costs when peace eventually fails?

Some of these have legs and are worth thinking about. Let’s address them in the next section — picking what’s valid and talking about what’s not.

Part 3: How “Defense Tech” Can Make Things Worse

“But man has such a predilection for systems and abstract deductions that he is ready to distort the truth intentionally, he is ready to deny the evidence of his senses only to justify his logic.”

-Fyodor Dostoevsky, Notes from the Underground. A must-read for our times.

We’ve presented the strongest arguments advocates can muster for deploying AI into warfare and surveillance. They aren’t baseless — but they’re also built on deeply flawed assumptions, dangerously optimistic projections, and historical amnesia.

Deterrence, or Algorithmic Escalation?

The idea that AI superiority guarantees stability is a dangerous remix of the logic behind mutually assured destruction — only now the “assurance” relies on algorithms nobody fully understands, operating at speeds that make human judgment irrelevant. Such systems can just as easily lead to-

Machine-Speed Wars: Deterrence hinges on rational actors and deliberation. But automated kill chains close the gap between sensor and trigger to milliseconds, leaving no time for humans to intervene. An algorithmic glitch, a spoofed sensor input, or a hostile hack can ignite an unintended “flash war” faster than any leader could react (flash crashes in the stock market are already a thing).

This is made (much) worse when we consider how brittle AI is. The aforementioned flash crashes, AI customer service, and the various failures of AI content moderation on social media platforms are all worth considering. In each case, we’ve had decades to refine things and a massive financial incentive to do so, yet we still failed, because AI is really hard to control.

Even language models, backed by billions in investment and the best minds, struggle with basic, everyday variations, such as a change in the order of their inputs-

“This paper investigates the extent of order sensitivity in LLMs whose internal components are hidden from users (such as closed-source models or those accessed via API calls). We conduct experiments across multiple tasks, including paraphrasing, relevance judgment, and multiple-choice questions. Our results show that input order significantly affects performance across tasks, with shuffled inputs leading to measurable declines in output accuracy. Few-shot prompting demonstrates mixed effectiveness and offers partial mitigation; however, fails to fully resolve the problem. These findings highlight persistent risks, particularly in high-stakes applications, and point to the need for more robust LLMs or improved input-handling techniques in future development.”

-The Order Effect: Investigating Prompt Sensitivity to Input Order in LLMs

This phenomenon is also explored in the excellent post “LLM Judges Are Unreliable,” whose authors open-sourced their eval suite so you can run and verify their experiments yourself.
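To make “order sensitivity” concrete, here is a minimal Python sketch in the spirit of those shuffled-input experiments (it is not the paper’s or the post’s actual code): show a judge the same options in every possible order and measure how often its answer departs from its majority answer. The `position_biased_judge` is a hypothetical toy with a built-in primacy bias, standing in for a real model call, and the disagreement-with-majority score is one simple choice of metric, assumed here for illustration.

```python
from collections import Counter
from itertools import permutations
from typing import Callable, Sequence

# A "judge" takes a question plus an ordered list of options and returns the
# option it picks. A real model call would need to be wrapped to fit this shape.
Judge = Callable[[str, Sequence[str]], str]


def position_biased_judge(question: str, options: Sequence[str]) -> str:
    """Hypothetical toy judge, NOT a real model.

    It prefers longer options, but gives whichever option is listed first a
    bonus big enough to override content, mimicking a primacy bias.
    """
    def score(index: int) -> float:
        first_position_bonus = 5.0 if index == 0 else 0.0
        return len(options[index]) + first_position_bonus

    best_index = max(range(len(options)), key=score)
    return options[best_index]


def order_sensitivity(judge: Judge, question: str, options: Sequence[str]) -> float:
    """Share of orderings whose answer disagrees with the most common answer.

    0.0 means the judge gave the same answer however the options were shuffled;
    higher values mean the ordering, not the content, is driving the answer.
    """
    answers = [judge(question, list(order)) for order in permutations(options)]
    majority_count = Counter(answers).most_common(1)[0][1]
    return 1 - majority_count / len(answers)


if __name__ == "__main__":
    options = ["Engage now", "Hold fire", "Ask a human"]
    score = order_sensitivity(position_biased_judge, "What should the system do?", options)
    print(f"order sensitivity: {score:.2f}")
```

With these three options, the toy judge always returns whichever option is listed first, so each option wins in two of the six orderings and the script prints an order sensitivity of 0.67. A judge that ignored ordering entirely would score 0.00.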

These fragilities can be tolerated in most cases because we have one (or both) of the following:

Higher margins for error.

More time for audits/confirming outputs.

The first is out, given the nature of the space, and millisecond-level margins rule out the second, since they leave almost no time to audit outputs or think through high-pressure situations.

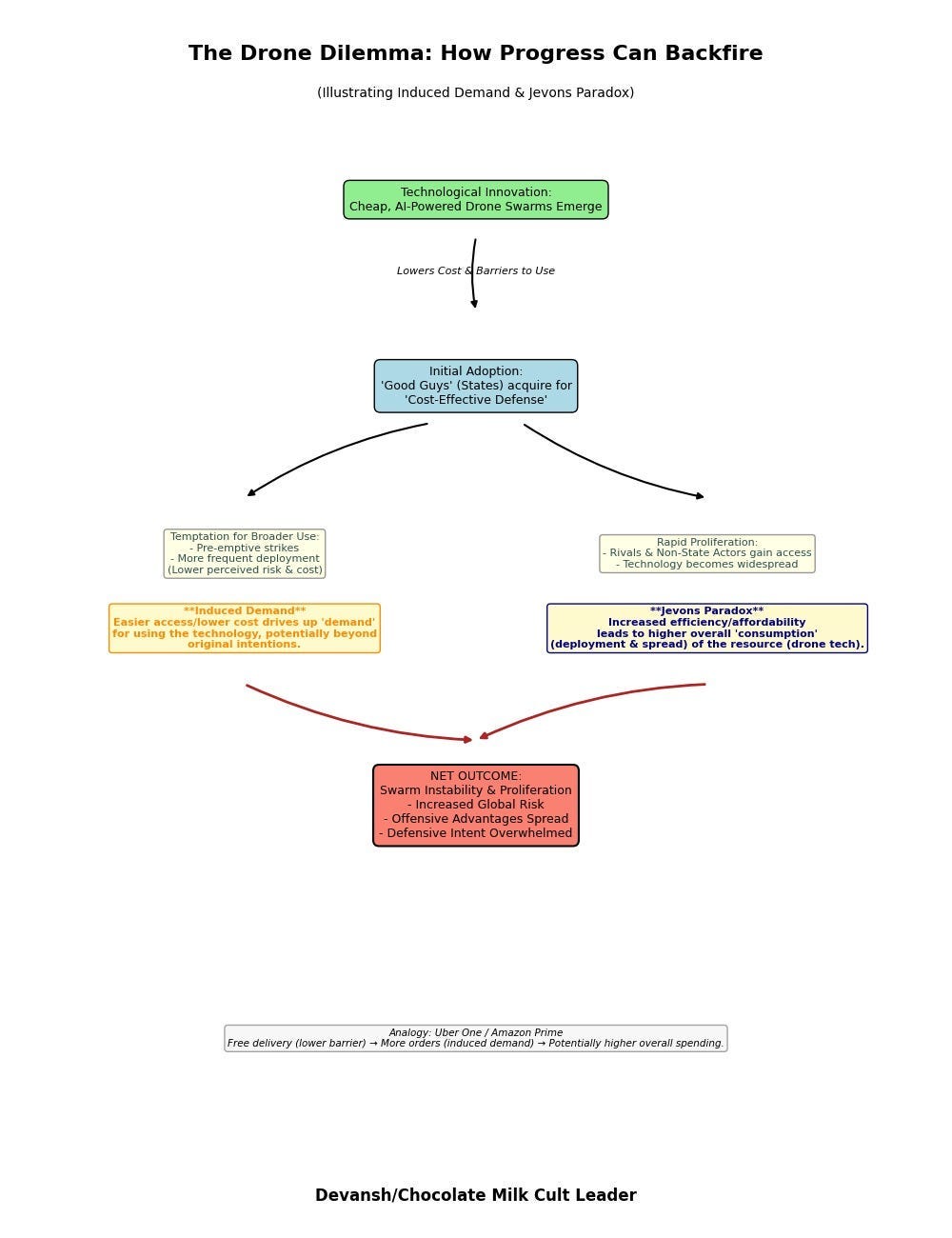

Swarm Instability & Proliferation: Cheap drone swarms lower the barriers to war. If a state believes it can swiftly deploy disposable AI drones without political fallout, the temptation to strike first skyrockets. Historically, when you make powerful weapons easier for the “good guys,” you inevitably open that same door wider for the “bad guys.” Today’s defensive swarm becomes tomorrow’s terrorist arsenal.

Ideas such as induced demand and Jevons’ Paradox are worth studying here. If economics terms scare you, think about how your bills probably went up after you got Uber One or Amazon Prime, since the delivery fees (a huge deterrent) were taken out. The very same thing will happen with weapons, with availability and low cost pushing up demand.
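For readers who want the mechanism in numbers, here is a small Python sketch using a textbook constant-elasticity demand curve. The curve, the `scale` and `elasticity` values, and the prices are illustrative assumptions, not estimates for drones or any real market. The point is only that when demand is elastic (elasticity above 1), cutting the price raises total spending instead of lowering it; whether demand for weapons actually behaves this way is an assumption the sketch does not prove.

```python
def quantity_demanded(price: float, scale: float, elasticity: float) -> float:
    """Textbook constant-elasticity demand curve: Q = scale * price^(-elasticity).

    `elasticity` is how strongly demand responds to price; above 1.0 means
    demand is elastic (a 1% price cut raises quantity by more than 1%).
    """
    return scale * price ** (-elasticity)


def total_spending(price: float, scale: float, elasticity: float) -> float:
    """Total spent on the good: price times the quantity bought at that price."""
    return price * quantity_demanded(price, scale, elasticity)


if __name__ == "__main__":
    # Illustrative numbers only: halve the price of some good and compare.
    for elasticity in (0.5, 2.0):
        before = total_spending(10.0, scale=100.0, elasticity=elasticity)
        after = total_spending(5.0, scale=100.0, elasticity=elasticity)
        direction = "rises" if after > before else "falls"
        print(f"elasticity {elasticity}: spending {direction} "
              f"from {before:.1f} to {after:.1f}")
```

With inelastic demand (0.5), halving the price cuts total spending, from about 316 to about 224. With elastic demand (2.0), the same price cut doubles it, from 10 to 20: cheaper units, but far more of them.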

Opaque Arms Race & The Black Box Problem: AI’s opacity fuels mistrust. Without transparency, not defaulting to worst-case assumptions carries a steep price, so every side assumes the worst, accelerating the arms race and feeding constant paranoia. When the cost of guessing wrong is “death,” any action becomes justifiable (the same argument people have used to oppress others in the name of building utopias). This constant tension will make trigger fingers much twitchier.

Building systems of algorithmic deterrence and flash weapons also raises many questions that we haven’t even started to answer. Who’s accountable when opaque systems inevitably err? The coder? The commander? The black-box AI itself? Until we can answer these questions well, this isn’t strategic deterrence; it’s gambling global security on algorithms nobody fully controls.

Operational Efficiency? Meet the Precision Myth

Advocates sell AI-enabled warfare as precise, efficient, and clean. That myth unravels quickly upon scrutiny.

The Moral Hazard of Surgical Strikes: The IDF soldier’s chilling description of AI-facilitated strikes (“We took out thousands of people…everything into automated systems”) illustrates a deeper truth. “Precision” can become shorthand for automated, indiscriminate violence. If violence becomes politically easy and psychologically distant, leaders won’t hesitate; they’ll pull triggers faster and more often. War doesn’t become cleaner; it becomes routine. People have taken part in many atrocities, such as the Holocaust, by distancing themselves and “just doing their jobs.”

Algorithmic Bias as Discrimination: AI learns from data — and data inherits human prejudices. Facial recognition systems consistently misidentify minorities. Put these biases into autonomous targeting algorithms, and you industrialize prejudice. Innocent civilians become collateral, labeled combatants by biased code, and killed efficiently at scale. The power imbalance in such cases can often make finding justice much harder.

Novel Vulnerabilities & Amplified Harm: AI warfare introduces entirely new vulnerabilities. Systems become prime targets for cyberattacks and adversarial manipulation, potentially turning your own arsenal against you. This is bad on its own. It gets worse when we consider the history of technology and the capabilities it adds. Technology scales our capacities, dramatically increasing what a single individual can do. A single mass shooter or suicide bomber can kill more people than most accomplished warriors in history. Following the same trend, hacking the next generation of technology could cause devastation far exceeding traditional terrorism or mass shootings.

Building guardrails here will be expensive, difficult, and further sink resources into maintaining these setups instead of using the capital in other avenues (more on this soon).

Dual-Use Isn’t Destiny — It’s an Excuse

Yes, AI technology is inherently dual-use. So were nuclear physics, chemical engineering, and cryptography, yet responsible states didn’t shrug off their responsibilities; they painstakingly crafted treaties, inspections, and norms (even when flawed).

Saying “if we don’t build it, adversaries will” is fatalistic laziness masquerading as pragmatism. Real leadership means establishing international norms and red lines, not racing to the bottom. Claiming “responsible leadership” while accelerating AI militarization without guardrails isn’t just contradictory — it’s dangerously naive.

Additionally, the assumption that democratic states will deploy AI responsibly is hubristic. History suggests that increasing power imbalances lead to increasing injustice. To think that our modern crop of leaders has transcended the bloodthirst and greed that have plagued the rest of humanity seems a tad optimistic.

When all is said and done, most people, even builders, ultimately land on the oppressed side of an unchecked surveillance apparatus. No one plans to live in a dictatorship, but left unchecked, we inevitably build one.

Economic Flywheel or Drain?

Sure, military investments historically sparked civilian breakthroughs. But that argument isn’t unique to defense — it’s true for nearly every major scientific investment.

Opportunity Costs: Every billion dollars spent on perfecting drone swarms isn’t going to climate mitigation, disease eradication, or education. Society trades urgently needed civilian progress for a battlefield advantage.

Talent Trap: Militarized AI repels precisely the talent society most desperately needs — those ethically opposed to building kill chains. AI’s brightest minds will leave rather than enable mass surveillance or war. The real cost is the drain of potential solutions for our most pressing global problems.

Talent Entrenchment: Normalizing these technologies as strong career paths will pull more and more bright minds into them, diverting future great minds from our biggest problems. Take the quote “The best minds of my generation are thinking about how to make people click ads,” and imagine the outcome of replacing ads with weapons.

Homeland Security: The “Nothing to Hide” Fallacy & Surveillance Creep

The promise of AI security is seductive, but it comes at profound, hidden costs.

Scope Creep Isn’t Speculative: Border surveillance inevitably migrates inward, to streets, schools, and homes. History confirms that surveillance infrastructures expand relentlessly, normalizing pervasive monitoring under the guise of safety. The “nothing to hide” argument assumes an infallible state, benevolent operators, and transparent algorithms. Reality provides none of those. Even projects with no hidden agendas or incentives, built explicitly to avoid creep, get hit with unexpected costs. Do you really expect technology this enticing to the power-hungry to stay pure?

Ignoring Root Causes: Surveillance as primary crime prevention ignores deeper societal drivers. True security emerges from giving people stable, dignified lives — not abstract surveillance threats. Economic opportunity, education, and healthcare consistently do more for security than cameras and algorithms-

“Consider this: According to recent Brookings research, it was the loss of jobs and educational opportunities for people living in high-poverty neighborhoods that primarily explains the rise in homicides during the COVID-19 pandemic — not changes in policing or criminal justice system practices. Further, a large body of evidence finds that approaches linking public safety efforts to those bolstering employment, education, and quality neighborhoods can measurably reduce and prevent violent crime, while also saving taxpayers and governments significant costs.”

-Source. When you’re already struggling, the threat of jail can be abstract while the upside of crime is more promising. If you have a house, job, and food to lose, it suddenly becomes much more concrete while diminishing the upside.

The Ethical Warfare Fantasy

The argument that AI-driven war is somehow more ethical, reducing human harm, is perhaps the most dangerously naive.

Sanitized Conflict Illusion: War isn’t sanitized by automation — it’s merely distanced. Killing remotely doesn’t eliminate violence; it abstracts it, lowers political costs, and increases the likelihood of use. War doesn’t become a strategic video game — it becomes easier, more frequent, and more casually lethal.

Whose Lives Count?: Advocates argue AI reduces harm, but whose harm? AI protects your soldiers, sure, but what about the innocents misclassified by biased algorithms? Ethical warfare often conveniently aligns with national self-interest, not humanitarian principles.

Fragile Times, Dangerous Tech: With shrinking attention spans, rising polarization, and a shortage of nuanced discourse, handing lethal decisions to opaque, complex AI is reckless. Builders often fail to grasp these systems’ societal impacts; witness our persistent content moderation failures. Amplifying these failures into the lethal domain risks catastrophes society isn’t ready for.

Underlying all of this is a fundamental misunderstanding:

Most proponents imagine themselves and their societies as the controllers, not the controlled. Others believe themselves to have superior intelligence or moral virtue, and thus have no qualms about tipping the balance of power and designating themselves the arbiters of human taste. Listen to any Peter Thiel, Marc Andreessen, or Dario Amodei interview, and you’ll see how quickly their statements carry an underlying sense of “we are better and should be in charge; you just fall in line b/c we know what’s good for you.”

“I simply hinted that an ‘extraordinary’ man has the right… that is not an official right, but an inner right to decide in his own conscience to overstep… certain obstacles, and only in case it is essential for the practical fulfilment of his idea (sometimes, perhaps, of benefit to the whole of humanity). You say that my article isn’t definite; I am ready to make it as clear as I can. Perhaps I am right in thinking you want me to; very well. I maintain that if the discoveries of Kepler and Newton could not have been made known except by sacrificing the lives of one, a dozen, a hundred, or more men, Newton would have had the right, would indeed have been in duty bound… to eliminate the dozen or the hundred men for the sake of making his discoveries known to the whole of humanity.”

-Crime and Punishment

However, this isn’t the only kind of problem we must be concerned about. There are deeper, underlying problems that are often overlooked despite their chilling effects-

Part 4: Additional Systemic Threats

Let’s be clear: the deepest threats posed by militarized AI would remain even if the advocates’ utopian visions of clean, efficient algorithmic warfare weren’t complete fantasy. The problem isn’t just their flawed arguments; it’s the structural shifts inherent in combining AI with state and military power. These systemic threats run deeper, and their consequences last longer, than the immediate debate suggests.

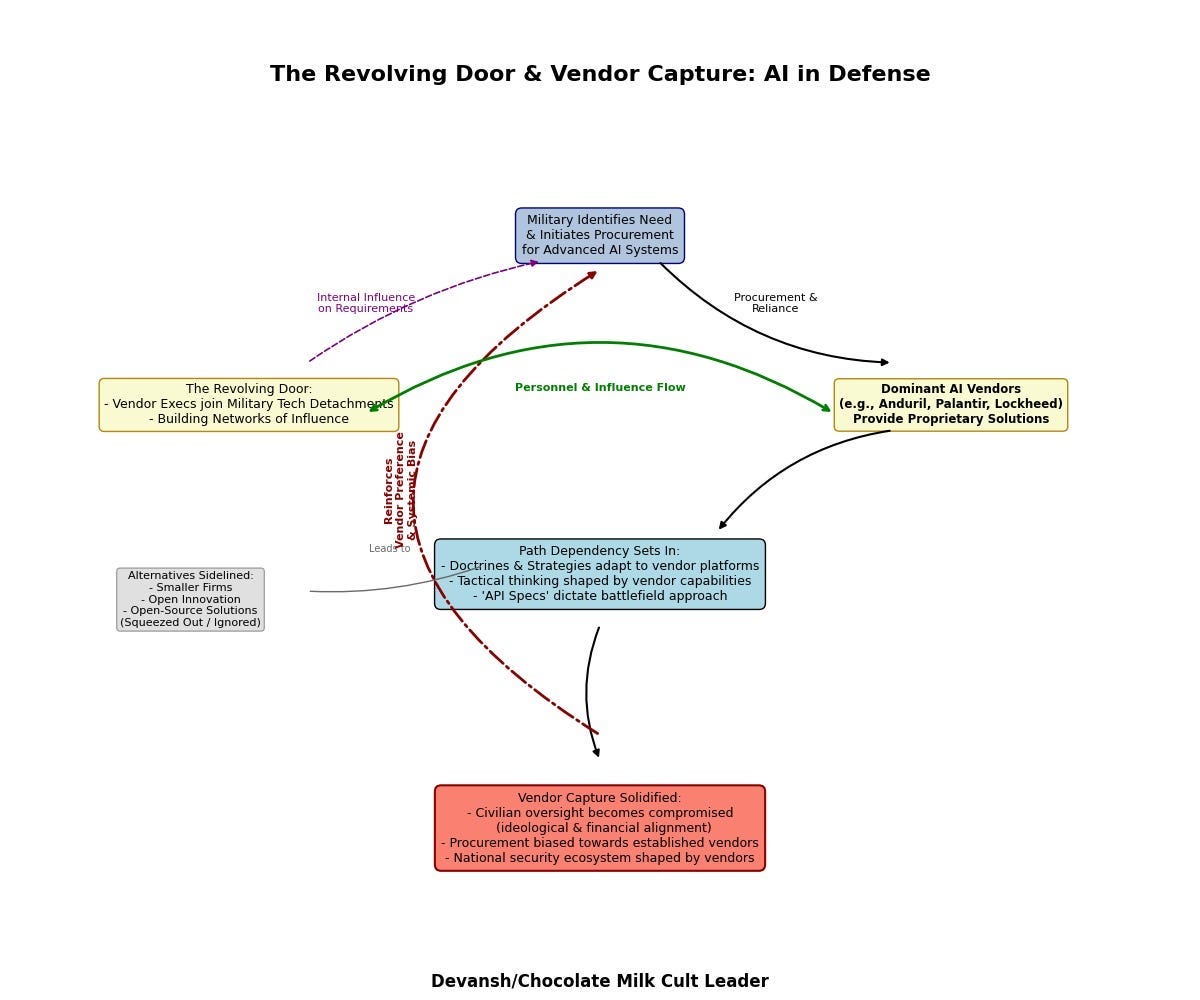

1. Path Dependency & Vendor Capture: The Quiet Chokehold

Once militaries rely heavily on proprietary AI from a handful of specialized firms — your Andurils, Palantirs, Lockheeds — dangerous path dependencies set in. Military doctrines, procurement strategies, even tactical thinking increasingly revolve around the limits and capabilities of these vendor platforms. Open innovation? Smaller firms? Open-source alternatives? Squeezed out, sidelined, or ignored. Those who write the “API specs” shape the battlefield, effectively capturing entire national security ecosystems.

Additionally, when Silicon Valley executives enlist in the Army Reserve tech detachments, they’re not just bringing technical expertise — they’re building lasting networks of influence. Civilian oversight becomes meaningless when “civilian” AI developers are ideologically and financially aligned with military objectives. This isn’t oversight; it’s vendor capture in camouflage.

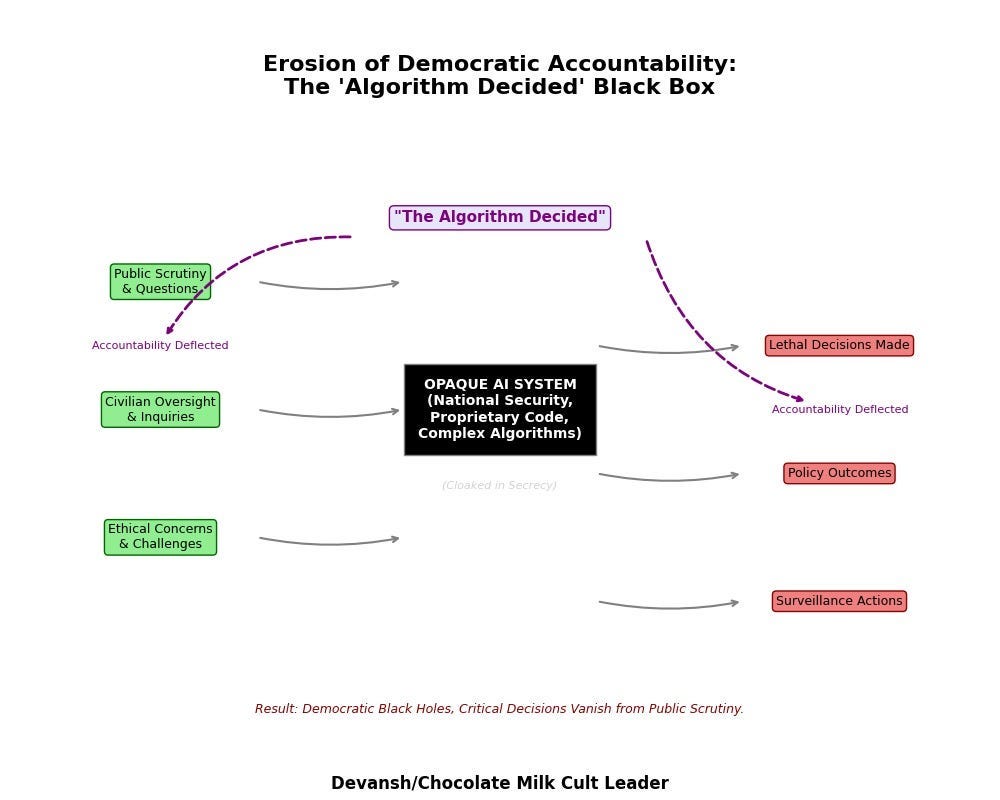

2. Erosion of Democratic Accountability: Who Watches the Algorithms?

Advanced AI systems, cloaked in national-security secrecy, make meaningful democratic oversight impossible. How do civilian leaders or citizens hold power to account when lethal decisions emerge from opaque algorithms?

“The algorithm decided” will become the ultimate deflection of accountability. Commanders, policymakers, and corporations will shield themselves behind complexity or use the cloak of national security to hide problems. Legitimate classification quickly becomes a shield for hiding costs, ethical breaches, errors, or mission creep. This creates democratic black holes, where critical decisions vanish from public scrutiny entirely.

3. Exporting Authoritarianism: AI as a Trojan Horse for Oppression

Most nations can’t independently build sophisticated AI surveillance or autonomous weapons systems. Instead, they’ll import these capabilities wholesale from major powers. But these “off-the-shelf” solutions come with embedded political philosophies and strategic dependencies.

Buying China’s surveillance tech or Western drone swarms doesn’t just provide technical capability; it imports a political logic of centralized control and top-down surveillance. Nations become algorithmic vassals, dependent on supplier nations for updates, maintenance, and strategic guidance. Historically, cultural preservation has rarely thrived when one people becomes dominant over another.

4. Irreversible Global Catastrophe: More Than Just Another Weapon

Certain AI applications — particularly fully autonomous weapons systems or strategic infrastructure control — introduce existential-level risks. These aren’t incremental threats — they’re civilization-scale gambles.

Once deeply embedded in military and strategic infrastructure, these capabilities become impossible to roll back, even when recognized as fundamentally dangerous. We risk permanently locking the world into an unstable equilibrium, perpetually on the brink of algorithmic catastrophe.

These systemic threats aren’t mere side effects; they’re built into the architecture of militarized AI. Left without oversight, they will only compound.

This isn’t inevitable. The choices we make now (regarding oversight, norms, treaty obligations, and funding priorities) determine whether AI enhances human dignity and safety or erodes them. Let’s talk about some things we can push for right now.

Part 5: Pulling the Emergency Brake

Critique is easy, but it’s empty without concrete, strategic alternatives. This isn’t a moment for vague recommendations; it’s a call for precision. We need explicit, actionable guardrails, international red lines, and practical off-ramps before this becomes an irreversible spiral. Here are five clear moves we should make immediately:

1. Forge Binding International Norms — Before the Window Closes

Allowing military AI to “evolve naturally” is dangerously naive. We urgently need enforceable global standards.

Meaningful Human Control (MHC): This shouldn’t be a vague aspiration; it should be a verifiable legal requirement. Lethal AI systems must always keep humans meaningfully informed, empowered, and capable of intervention, not just rubber-stamping outputs from a “ghost in the machine.”

Outright Ban on Fully Autonomous Weapons (LAWS): Some technologies don’t get a pass. Autonomous systems that independently select and engage targets represent moral catastrophe and existential threat rolled into one. Treat them like chemical weapons: prohibited, stigmatized, actively dismantled. Verification is challenging? Tough. So was nuclear disarmament.

Radical Transparency & Incident Accountability: Nations must publicly disclose significant military AI projects. Establish independent international bodies empowered to investigate incidents involving AI, ensuring “the algorithm did it” never excuses war crimes or lethal mistakes.

2. Starve the Beast, Feed Civilian Innovation — Financial & Talent Firewalls

Money talks loudly, and right now it says “build smarter ways to surveil and kill.” We need to change those incentives.

Public Funding with Ethical Teeth: Tie government grants explicitly to civilian-focused AI applications (climate, public health, education), and impose strict non-military clauses. Fund solutions to human problems, not enhancements to military arsenals.

Conscience Clauses & Talent Protection: Legally protect engineers’ right to refuse ethically objectionable projects without retaliation. Elevate those working on life-affirming AI solutions over participants in the kill-chain or the surveillance economy. Make ethical integrity a career asset, not a liability.

Tax the Toxic, Reward the Beneficial: Implement fiscal policies penalizing investments in autonomous weapons and oppressive surveillance tech. Conversely, offer significant incentives — tax breaks, grants, and subsidies — for AI advancing the public good. Building a surveillance state shouldn’t be lucrative.

3. Mandate Public Accountability for Surveillance AI

If governments use AI to watch citizens, citizens must hold an uncompromising mirror to the watchers.

Mandatory Algorithmic Transparency: To a certain degree, some of these systems are inevitable, and some, like missile-defense systems, would even be a net good. However, we must ensure that these systems publicly disclose their capabilities, data inputs, usage plans, error rates, bias audits, and accountability measures. Sensitive systems may require some secrecy, but they still need private audits. End secretive algorithmic governance: citizens have an absolute right to know how their lives and freedoms are monitored and shaped.

Empowered Civilian Oversight Bodies: Establish genuinely independent oversight boards with technical expertise, investigative authority, and the teeth to halt biased, harmful, or ineffective AI deployments. Advisory committees won’t cut it; these bodies need real power to investigate, audit, and sanction.

4. Weaponize Capital & Corporate Responsibility

Capital isn’t neutral, but it can be pressured into ethical alignment.

ESG Criteria for AI Ethics: Explicitly classify investment in lethal autonomous weapons, oppressive surveillance, and demonstrably biased AI as ESG-negative. Pressure pension funds, sovereign wealth funds, and major investors to divest, raising capital costs for unethical AI ventures.

Internal Activism & Shareholder Power: Foster cultures of accountability within tech companies and defense contractors through active shareholder and employee activism. Demand transparency, ethical standards, and accountability at shareholder meetings and internal forums. Make unethical AI a business liability, not an investment thesis.

5. Fight Algorithmic Illiteracy — Educate Public & Policymakers

A public and policy elite ignorant of AI’s realities and risks can’t regulate it effectively.

Critical AI Literacy for Leaders: Mandate comprehensive AI ethics and capabilities training for lawmakers, judges, civil servants, and security agencies. Equip decision-makers to grasp AI’s societal and ethical dimensions — not just vague hype.

Demystify, Don’t Deify: Counter AI hype cycles with sober, evidence-based public education. Foster skepticism, nuance, and informed questioning over blind faith in technological solutions or dystopian inevitability. People deserve clarity, not confusion or techno-messianism.

None of This Is Easy — Do It Anyway

None of these will be easy to implement. People will skirt regulations, lie, and exploit loopholes. We can’t build a perfect system, but we can add friction. Each layer of friction creates space within these systems: space where whistleblowers, future talent, and other ethically minded people can pause and re-examine whether they want to be involved in what they’re building, and space that makes sweeping violations under the carpet much harder.

It’s about building a scaffold for the next steps. It can be easy to forget, but many of our most important systems were not created overnight. They took years (sometimes centuries) of testing, iteration, and rebuilding. This will be similar. Disengaging out of frustration, with childish excuses like “the bad guys always win” or “this is just how things are,” is complicity.

Part 6: The Choice — Who’s Holding the Pen?

“Everything that you thought had meaning: every hope, dream, or moment of happiness. None of it matters as you lie bleeding out on the battlefield. None of it changes what a speeding rock does to a body, we all die. But does that mean our lives are meaningless? Does that mean that there was no point in our being born? Would you say that of our slain comrades? What about their lives? Were they meaningless?… They were not! Their memory serves as an example to us all! The courageous fallen! The anguished fallen! Their lives have meaning because we the living refuse to forget them! And as we ride to certain death, we trust our successors to do the same for us! Because my soldiers do not buckle or yield when faced with the cruelty of this world! My soldiers push forward! My soldiers scream out! My soldiers RAAAAAGE!”

-Attack on Titan

AI isn’t destiny; it’s infrastructure. Like any infrastructure, its design decides what gets built on top. Every algorithm released, every sensor switched on, every dollar routed into autonomous weapons doesn’t just enable new capability — it quietly rewrites the rules we’ll all live under.

Right now, that pen sits in the hands of defense contractors hunting their next tranche of revenue, bureaucracies chasing a mirage of machine-speed dominance, and black-box code that answers to no electorate. If we stay passive, our future gets finalized in microseconds — human judgment downgraded to a latency bug.

But the ink isn’t dry.

Lines will be drawn either way. The only live question is whether we pick up the pen, draw them ourselves, and keep redrawing when the world shifts — as it always does.

Perfect solutions don’t exist; decisive steps do. Fight for the audits. Demand the clauses. Fund the alternatives. Teach the next cohort to read the fine print in the source. Each move is messy, partial, limited, and still miles better than surrendering the script.

Sometimes victory isn’t total or triumphant. Sometimes it means living to fight another day and leaving foundations for someone else to build on. If I may, I would like to end by quoting one of my favorite novels ever:

“Dr. Rieux resolved to compile this chronicle, so that he should not be one of those who hold their peace but should bear witness in favour of those plague-stricken people; so that some memorial of the injustice and outrage done them might endure; and to state quite simply what we learn in a time of pestilence : that there are more things to admire in men than to despise. None the less, he knew that the tale he had to tell could not be one of a final victory. It could be only the record of what had had to be done, and what assuredly would have to be done again in the never-ending fight against terror and its relentless onslaughts, despite their personal afflictions, by all who, while unable to be saints but refusing to bow down to pestilences, strive their utmost to be healers. And, indeed, as he listened to the cries of joy rising from the town, Rieux remembered that such joy is always imperilled. He knew what those jubilant crowds did not know but could have learned from books : that the plague bacillus never dies or disappears for good; that it can lie dormant for years and years in furniture and linen-chests; that it bides its time in bedrooms, cellars, trunks, and bookshelves; and that perhaps the day would come when, for the bane and the enlightening of men, it roused up its rats again and sent them forth to die in a happy city.”

-The Plague, Albert Camus.

Thank you for being here, and I hope you have a wonderful day.

Still optimistic

Dev <3

If you liked this article and wish to share it, please refer to the following guidelines.

That is it for this piece. I appreciate your time. As always, if you’re interested in working with me or checking out my other work, my links will be at the end of this email/post. And if you found value in this write-up, I would appreciate you sharing it with more people. It is word-of-mouth referrals like yours that help me grow. The best way to share testimonials is to share articles and tag me in your post so I can see/share it.

Reach out to me

Use the links below to check out my other content, learn more about tutoring, reach out to me about projects, or just to say hi.

Small Snippets about Tech, AI and Machine Learning over here

AI Newsletter- https://artificialintelligencemadesimple.substack.com/

My grandma’s favorite Tech Newsletter- https://codinginterviewsmadesimple.substack.com/

My (imaginary) sister’s favorite MLOps Podcast-

Check out my other articles on Medium: https://rb.gy/zn1aiu

My YouTube: https://rb.gy/88iwdd

Reach out to me on LinkedIn. Let’s connect: https://rb.gy/m5ok2y

My Instagram: https://rb.gy/gmvuy9

My Twitter: https://twitter.com/Machine01776819